This is cross-posted from the WorkWhile engineering blog.

This post describes a zero-downtime schema change to a high-contention table, guided (and greatly accelerated) by ChatGPT.

It turns out it's quite straightforward to construct an "almost unique" constraint in PostgreSQL via `EXCLUDE`.

I was recently stuck in a jam in our PostgreSQL database during a feature migration. We needed a "partial UNIQUE" constraint, and I was surprised to find out that PostgreSQL doesn't support them¹ …

This post was included in Golang Weekly issue 499. This is cross-posted from the Hardfin engineering blog.

The Go standard library uses a single overloaded type, `time.Time`, as a stand-in for both full datetimes¹ and dates. This mostly "just works", but slowly starts to degrade correctness in codebases where both …

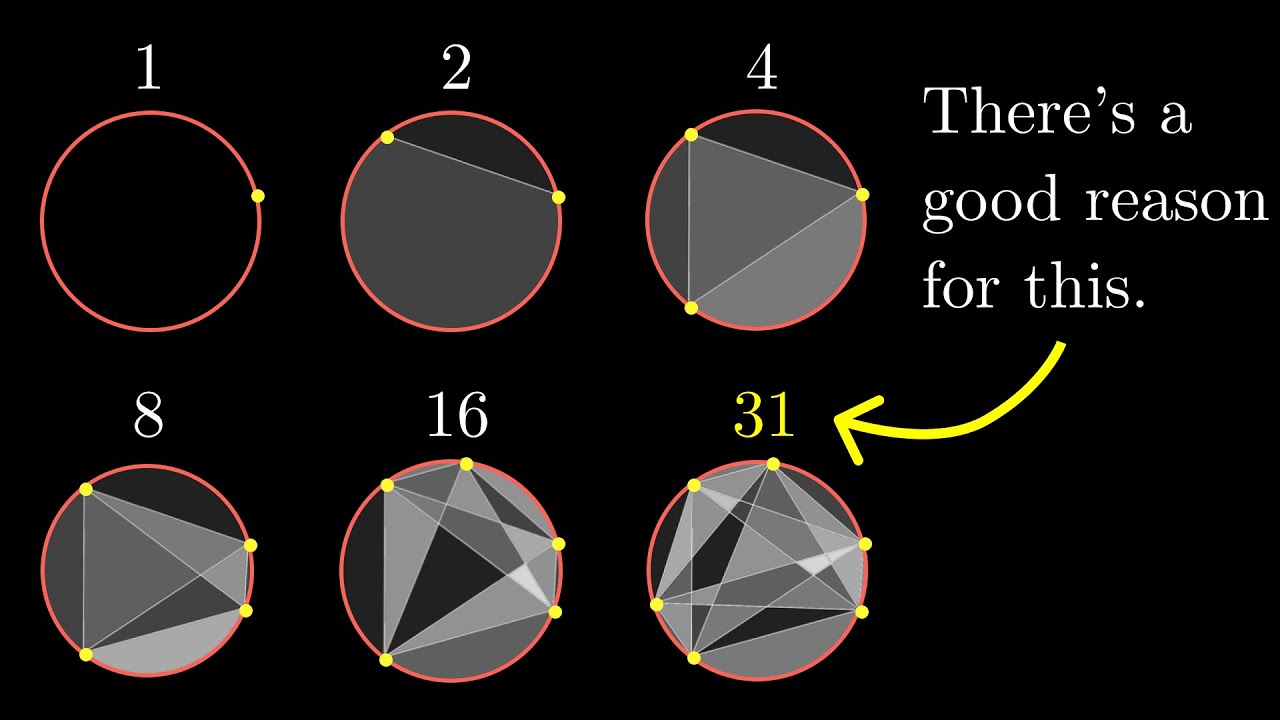

Below, we'll show two facts that help us understand the positive integer solutions to the following Diophantine equation:

We'll show that there is a lone positive root and …